SolidityBench is launched by IQ as the first scoreboard to evaluate LLMs in Solidity code generation. available in Embraced faceintroduces two innovative metrics, NaïveJudge and HumanEval for Solidity, designed to evaluate and rank the skill of artificial intelligence models in generating smart contract code.

Developed by IQ BrainDAO As part of its upcoming Code IQ suite, SolidityBench serves to refine its EVMind LLMs and compare them to public, community-created models. IQ Code aims to provide artificial intelligence models designed to generate and audit smart contract code, addressing the growing need for secure and efficient blockchain applications.

As IQ said CryptoSlateNaïveJudge offers a new approach with homework to LLMs by implementing smart contracts based on detailed specifications derived from audited OpenZeppelin contracts. These contracts provide a gold standard for accuracy and efficiency. Generated code is evaluated against the reference implementation using criteria such as functional completeness, adherence to Solidity best practices and security standards, and optimization efficiency.

The evaluation process has leverage Advanced LLMsincluding various versions of OpenAI's GPT-4 and Ghazal Cloud 3.5 as an unbiased code reviewer. They evaluate code based on rigorous criteria, including implementation of all key functions, handling of edge cases, error handling, appropriate use of syntax, and overall code structure and maintainability.

Optimization considerations such as gas efficiency and storage management are also evaluated. Scores range from 0 to 100, providing a comprehensive assessment of performance, security, and efficiency, and reflecting the complexities of developing professional smart contracts.

Which AI models are best for solid smart contract development?

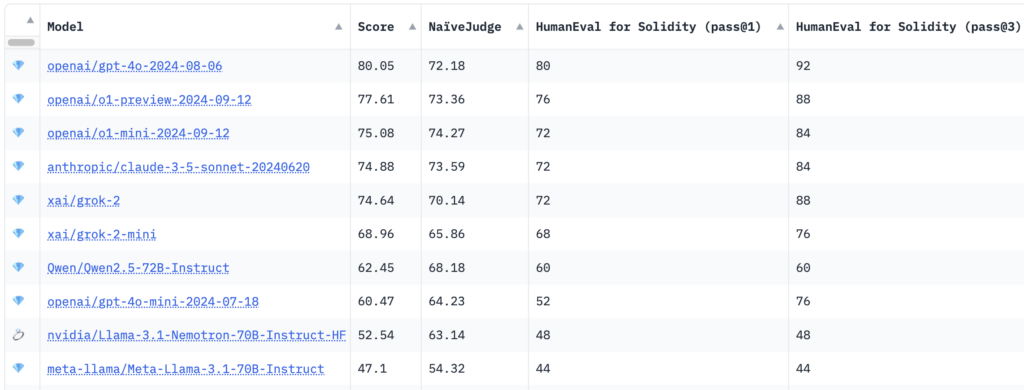

The benchmark results showed that the OpenAI GPT-4o model achieved the highest overall score of 80.05 with NaïveJudge score of 72.18 and HumanEval for Solidity of 80% in pass@1 and 92% in pass@3.

It is interesting that newer reasoning models such as Preview o1 OpenAI and o1-mini lost to first place with 77.61 and 75.08 respectively. Anthropic and XAI models, including the Claude 3.5 Sonnet and grok-2, showed competitive performance with overall scores around 74.

Based on IQ, HumanEval for Solidity adapts the original OpenAI HumanEval benchmark from Python to Solidity, which includes 25 tasks of varying difficulty. Each task includes corresponding tests compatible with Hardhat, a popular Ethereum development environment that facilitates the compilation and testing of generated code. The evaluation criteria, pass@1 and pass@3, measure the model's success in initial and multiple attempts and provide insights into its accuracy and problem-solving capabilities.

The goals of using artificial intelligence models in the development of smart contracts

By introducing these criteria, SolidityBench seeks to develop smart contracts with the help of artificial intelligence. It encourages the creation of more advanced and reliable AI models while providing developers and researchers with valuable insights into the current capabilities and limitations of AI in Solidity development.

This benchmarking toolkit aims to enhance EVMind's LLMs IQ Code and also set new standards for AI-assisted smart contract development across the blockchain ecosystem. This initiative hopes to address a critical need in the industry, where there is a demand for it safe And efficient smart contracts continue to grow.

Developers, researchers, and AI enthusiasts are invited to review and contribute to SolidityBench, which aims to continuously improve AI models, promote best practices, and advance decentralized applications.

Visit SolidityBench Scoreboard at Hugging Face to learn more and start benchmarking Solidity production models.